Nefertiti's Family

18 years 11 months ago #10674

by tvanflandern

Replied by tvanflandern on topic Reply from Tom Van Flandern

<blockquote id="quote"><font size="2" face="Verdana, Arial, Helvetica" id="quote">quote:<hr height="1" noshade id="quote"><i>Originally posted by neilderosa</i>

<br />I take it you are saying that even though the human eye can see the dark spots, it is legitimate to clean them up somewhat by the "smoothing" process, etc. because that's what the human eye does to some degree anyway, and because this aids us in seeing the feature more clearly.<hr height="1" noshade id="quote"></blockquote id="quote"></font id="quote">When we look at a scene with both bright and dark details, the human eye must do its own version of "equalize" or we could not see both kinds of features at the same time.

If equalize suppresses details instead of enhancing them, this is analogous to artists using lowered contrast to hide a signature or other detail that the artist did not wish to detract from the art work.

In the case of the PI, I suspect that nature (in the form of the EPH) added boulders and other debris to a finished piece of art. Equalize then operates to emphasize what was there originally (because it was designed to stand out) and suppresses what was added at random (because it wasn't designed to stand out).
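For readers unfamiliar with the operation, here is a minimal sketch of what an "equalize" step does, assuming it means standard histogram equalization as offered by most image editors (the thread does not say which tool or algorithm was actually used). The function name `equalize` and the sample pixel values are illustrative only.

```python
def equalize(pixels, levels=256):
    """Histogram-equalize a flat list of integer gray values in [0, levels).

    Assumption: this is textbook histogram equalization, not necessarily
    the exact routine used on the images discussed in this thread.
    """
    if not pixels:
        return []

    # Count how many pixels fall on each gray level.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Build the cumulative distribution of gray levels.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Only one gray level is present, so there is nothing to stretch.
        return list(pixels)

    # Remap each pixel so the occupied levels spread over the full range.
    scale = (levels - 1) / (total - cdf_min)
    return [round((cdf[p] - cdf_min) * scale) for p in pixels]


# Hypothetical low-contrast strip: all values crowded between 100 and 103.
strip = [100, 100, 101, 102, 103, 103]
stretched = equalize(strip)
```

In this sketch the darkest occupied level maps to 0 and the brightest to 255, so a narrow band of grays is spread across the whole range, and the brightness ordering of the pixels is preserved.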

<blockquote id="quote"><font size="2" face="Verdana, Arial, Helvetica" id="quote">quote:<hr height="1" noshade id="quote">Can this same line of thinking be applied to animations which hypothetically change the viewing angle of an image?<hr height="1" noshade id="quote"></blockquote id="quote"></font id="quote">We have reason to believe that these mesas, if artificial, were intended to be viewed from above. If we have a photo taken from a side view and are able to alter it to an overhead perspective by using height information, that would make it look more like what the eye would see, and passes the test.

<blockquote id="quote"><font size="2" face="Verdana, Arial, Helvetica" id="quote">quote:<hr height="1" noshade id="quote">Can it apply to cases where we add "symmetricality" to an image, as in the early enhancements that hypothesized how the "hidden" side of the original Viking images of the Cydonia Face should look?<hr height="1" noshade id="quote"></blockquote id="quote"></font id="quote">That is an example of making something look less like what the human eye would see. -|Tom|-


18 years 11 months ago #10675

by rderosa

Replied by rderosa on topic Reply from Richard DeRosa

<blockquote id="quote"><font size="2" face="Verdana, Arial, Helvetica" id="quote">quote:<hr height="1" noshade id="quote"><i>Originally posted by tvanflandern</i>

<br />To save bandwidth and to allow the transmission of fewer pixels to Earth, both the number of active channels in the CCD detector in the camera, and the number of grayscale levels, were pruned by a factor of two.

....................

In film photography, the analog of fewer grayscale levels would be a shorter exposure, darkening fewer silver grains. -|Tom|-

<hr height="1" noshade id="quote"></blockquote id="quote"></font id="quote">

Regarding your first point, how do you know that? Is that info posted somewhere on the MSSS site? Or maybe it's obvious to you from the histogram, and the image.

Regarding your second point, yes, that would certainly explain it. I don't want to belabor the point, but that doesn't say much for how seriously the person programming the acquisition parameters took the Public Request. Why bother taking it at all?

rd


18 years 11 months ago #10716

by rderosa

Replied by rderosa on topic Reply from Richard DeRosa

<blockquote id="quote"><font size="2" face="Verdana, Arial, Helvetica" id="quote">quote:<hr height="1" noshade id="quote"><i>Originally posted by tvanflandern</i>

<br />In film photography, the analog of fewer grayscale levels would be a shorter exposure, darkening fewer silver grains. -|Tom|-

<hr height="1" noshade id="quote"></blockquote id="quote"></font id="quote">

Tom, while following some links in the Cydonia discussion, I came across this quote from you, on Slide 5, "High-Pass Filter":

"When considering why this happened, we are left with an unhappy choice between dishonesty and incompetence. "

[url] www.metaresearch.org/solar%20system/cydo...act_html/default.htm [/url]

I think that's an appropriate conclusion to draw, and it's the same conclusion that I've been driving at here, regarding my comparison of M03 and R12. I recently said something very similar to Neil: it doesn't really have to be conspiracy, it could just be incompetence. Perhaps the people assigned to "Public Request" imaging aren't exactly "top-notch" employees. It's been my experience for many years, that in the world of high-technology it's quite common to have to go through 2 or 3 "tiers" before you get to the people who REALLY know stuff. The system is designed that way, by necessity. The first few levels are really just reading from checklists, and have no real domain knowledge of their own. The same is true for assignments. Since, as you say, JPL is not exactly on board with this whole artificiality theme, it stands to reason they're not exactly putting their best people on public request imaging.

It could just be a bandwidth issue like you said, but I suspect there's more to it than that.

rd


18 years 11 months ago #15235

by jrich

Replied by jrich on topic Reply from

<blockquote id="quote"><font size="2" face="Verdana, Arial, Helvetica" id="quote">quote:<hr height="1" noshade id="quote"><i>Originally posted by tvanflandern</i>

<br /><blockquote id="quote"><font size="2" face="Verdana, Arial, Helvetica" id="quote">quote:<hr height="1" noshade id="quote"><i>Originally posted by neilderosa</i>

<br />by "erasing" (as it were) some, or even many, of the dark spots in the "smoothing" or "noise reduction" process, you are in effect "erasing" data which would later appear as shiny spots in another image, and you are thus confusing the issue by tampering with the data.<hr height="1" noshade id="quote"></blockquote id="quote"></font id="quote">But the human eye does the same thing. Some studies will want to preserve what the spacecraft sees that a human cannot see. But for purposes of evaluating hypothetical artistic images, we surely do want to see what the human eye would see if it were there. -|Tom|-<hr height="1" noshade id="quote"></blockquote id="quote"></font id="quote">Tom, what is the justification for assuming that any artifacts that might exist were designed and meant to be viewed at the same visual range as our human eyes? Flowers are very striking and colorful to our eyes, but they are meant to be seen by birds and insects whose visual ranges are sometimes far different from ours. Those animals perceive flowers much differently, and some features that are almost imperceptible to us are unambiguous and highly distinct beacons to them. Perhaps at infrared or ultraviolet wavelengths the features that everyone seems to be trying so hard to find are more evident. Or perhaps the features that some believe they see are not evident at all at other ranges. This seems a non-trivial issue to me.

JR



18 years 11 months ago #17279

by tvanflandern

Replied by tvanflandern on topic Reply from Tom Van Flandern

<blockquote id="quote"><font size="2" face="Verdana, Arial, Helvetica" id="quote">quote:<hr height="1" noshade id="quote"><i>Originally posted by jrich</i>

<br />what is the justification for assuming that any artifacts that might exist were designed and meant to be viewed at the same visual range as our human eyes?<hr height="1" noshade id="quote"></blockquote id="quote"></font id="quote">You raise an interesting question, but I see it as unconnected with the discussion so far, and in particular with the two comments you quoted. We were not discussing the range of wavelengths accessible to human vision, but rather the eye's ability to squint or dilate so as to adjust itself for lighting and contrast. The comments made were about comparing our eye's abilities with the spacecraft camera CCD's abilities. Clearly, for our experience and visual analysis abilities to kick in, we want to know what our own eyes would see if we were at Mars.

As for your point about wavelength sensitivity, we think that evolution made our eyes most sensitive to the wavelength range where our Sun gives off the most light. The same would be true for other species that evolved in our solar system with our unmodified Sun as their primary light source. Obviously, nocturnal or amphibious creatures or those who do not depend on light to find food would evolve in different ways. But beings on Mars, or who evolved on a parent world near Mars, would very likely have the same wavelength sensitivity as we do because they would have had the same primary light source. -|Tom|-



18 years 10 months ago #15881

by neilderosa

Replied by neilderosa on topic Reply from Neil DeRosa

<blockquote id="quote"><font size="2" face="Verdana, Arial, Helvetica" id="quote">quote:<hr height="1" noshade id="quote">Originally posted by Phoenix VII

I take it as north is up on these pictures

What I was trying to say earlier was that isn’t it strange that the faces in fact are like you expect faces to be, with their hair up and neck down.

<hr height="1" noshade id="quote"></blockquote id="quote"></font id="quote">

Since no one picked up on Tom's cue to do a project to answer this question, I made a little foray myself. The MSSS acquisition parameters define North Azimuth as follows:

"In a raw or unprocessed MOC image, this is the angle in degrees clockwise from a line drawn from the center to the right edge of the image to the direction of the north pole of Mars. This number allows the user to determine "which way is north/south? and which way is east/west?""

I take "unprocessed" to mean "not map projected." Of the following two images, one is M0203051, the "Crownface," and the other is the M03 Profile Image. As you can see, north is not always "up" for these faces. I take "center to right edge" to mean a horizontal line perpendicular to the left and right edges. I confirm that I'm in the "ballpark" three ways: by comparing the result to the Mercator-projected (Map Projected) image, where north is (more or less) "up"; by comparing to the context image when there is one; and by looking at the shadows relative to the time of day. You could also compare the image to the MOLA map and match landmarks:

(<u><b>Note</b></u>: For the PI, I had to rotate the "not map projected" image 180 deg. so you could see the profile, and that's why the horizontal is going CW from the left side of the strip instead of the right. The Crownface actually appears in this upright orientation in the "not map projected" image.)

M0203051

M0305549

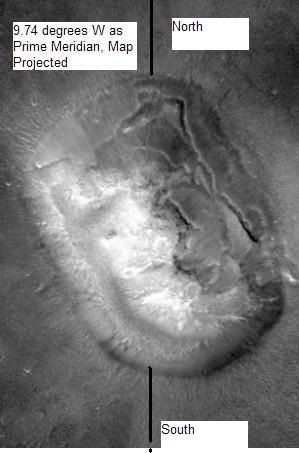
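The North Azimuth definition quoted above can be turned into a small calculation. What follows is only a sketch under stated assumptions, not the MSSS procedure: it assumes the usual raster convention (x to the right, y downward), reads "clockwise" as clockwise on the displayed image, and treats a 180 deg. rotation of the strip as adding 180 deg. to the angle. The function names and the sample value of 93 deg. are hypothetical.

```python
import math


def north_vector(north_azimuth_deg, rotated_180=False):
    """Return a unit vector (dx, dy) pointing toward north in pixel coordinates.

    Assumed convention: x increases to the right and y increases downward.
    The angle is measured clockwise, as seen on screen, from the line that
    runs from the image center to the right edge.
    """
    angle = north_azimuth_deg + (180.0 if rotated_180 else 0.0)
    theta = math.radians(angle % 360.0)
    # With y pointing down, an on-screen clockwise angle maps to (cos, sin).
    return (math.cos(theta), math.sin(theta))


def north_offset_from_up(north_azimuth_deg, rotated_180=False):
    """Return degrees clockwise from image "up" to north, in (-180, 180]."""
    angle = north_azimuth_deg + (180.0 if rotated_180 else 0.0)
    # Image "up" sits 270 deg. clockwise from the center-to-right-edge line.
    offset = (angle - 270.0) % 360.0
    return offset - 360.0 if offset > 180.0 else offset


# Hypothetical value for illustration; read the real one from the image's
# acquisition parameters.
as_delivered = north_offset_from_up(93.0)
after_rotation = north_offset_from_up(93.0, rotated_180=True)
```

Under these assumptions an azimuth of 270 deg. means north is straight up, and an image with an azimuth near 90 deg. has north close to straight down until the strip is rotated 180 deg., which matches the point that north is not always "up" in the unprojected strips.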

And here's the Cydonia Face (E170104). This "not map projected" image has been "messed with" in some small way (it's not inverted, flipped, or rotated 180 deg.; it's "normal" but in a straight-up strip), so I'm using deduction to tell me which way to take off from for my north-indicating angle.

And paying attention to Tom's advice from another post, here's the E17 Face again, cropped and straightened upright from the Map Projected jpg. As you can see, the two methods get you fairly close. It shouldn't have to be this hard, but there you have it. (Note: 9.74 deg. W Longitude is the center of the image.)

Neil

